PROBLEM LINK:

Setter: Constantinescu Andrei Costin

Tester: Misha Chorniy

Editorialist: Taranpreet Singh

DIFFICULTY:

Medium

PREREQUISITES:

Greedy, Heavy-Light Decomposition, Segment Tree with lazy Propagation.

PROBLEM:

Given N lights connected in form of a tree, we are to perform M updates involving flipping lights of two houses. After each query, we try pairing all lights. Print the minimum sum of the distance between each pair we can achieve by optimal pairing after each update.

QUICK EXPLANATION

- Each edge may be included at most once because if included more than once, we can always switch pairs to get this edge excluded by a factor of 2.

- This way, if we flip all edges from included to excluded and vice versa on the path of two houses, the answer is the number of included paths.

- This can be done using Heavy-Light decomposition combined with Segment tree with Lazy Propagation to perform range xor query.

EXPLANATION

Let us the same problem for a chain instead of a tree.

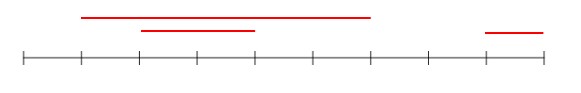

Consider example. Total 10 houses with house 3, 5, 9 and 10 having their lights on. The minimum sum of distances between pairs is 3. Suppose we get an update to flip lights of house 2 and 7. Now, the pairs shall be (2,3), (5,7), (9,10), as can be seen in the image.

We can see, that is is never optimal to keep an edge included more than once in any pairing. Also, notice that the range [2,7] just got flipped due to update.

Coming back to trees. Assume among all pairs, we have two pairs (a,b) and (c,d) such that paths from a to b and path from c to d pass through edge e with endpoints u and v. We can see, that since both paths cross this edge, one endpoint of each pair lie on one side while another endpoint on another side. So, it is better to pair nodes on each side to themselves. It can be seen, that the sum of the distance between pairs has reduced by two times the number of edges shared in the path between two pairs. Hence, optimal solution, trying to minimize the sum of distance, shall never have any such pair of pairs which have a shared path. This implies, whenever we have an update (a,b), we can just flip included to excluded and vice versa for all edges on the path from a to b. The answer is just Number of included edges.

An example can be found in secret box

Click to view

Consider tree formed by edges 1-2 1-3 1-4 2-5 2-6. Suppose houses 3,4,5 and 6 have their lights on. Then, pairs (3,4) and (5,6) are optimal. Pairing ((3,5),(4,6)) and Pairing ((3,6),(4,5)) is not optimal and both include shared path from 1 to 2.

This was all for the idea, coming to implementation. Basic knowledge of Heavy Light Decomposition is a must.

See, we basically divide tree into at most \lceil logN \rceil chains, such that we can perform range update over all vertices in time less than the number of vertices in a chain. This way, whenever we have an update, we split the update path into parts such that each sub-path belong to one chain. Each sub-path is just the same as solving the problem on a chain, as explained above. So, we are left with solving this problem for a chain. This is a well-known problem and can be found here.

Apart from that, some users face difficulty in handling edges in Heavy Light Decomposition, because an edge is represented by a combination of vertices. A very simple and neat way to tackle this would be as follows.

We have rooted the tree at any vertex, say r. Now, all vertices except r shall have exactly one edge which leads from that vertex to its parent. This is it. We represent edge from a vertex to its parent by that vertex. So, we can translate heavy light decomposition on edges to heavy light decomposition on vertices by modifying our implementation a little.

When updating path from a to b having LCA c, update all nodes on the path from a to c except c, and on the path from b to c except c. This way, we can now use heavy light decomposition on vertices to solve this problem. Details can be found in implementations below.

Learning Material

Heavy Light Decomposition here and here are good enough with nice practice problems.

For Segment tree with Lazy Propagation, this blog is nice. Practice problems can be found here.

The proof we used above, is actually n example of exchange argument used for proving/disproving optimality of any greedy solution. Read more about it here.

Time Complexity

Time complexity is O(N*logN+M*log^2N), O(N*logN) per chain and the total number of chains being at most logN. O(N*logN) preprocessing for LCA finding using binary lifting or Euler tour.

AUTHOR’S AND TESTER’S SOLUTIONS:

Setter’s solution

Tester’s solution

Editorialist’s solution

Feel free to Share your approach, If it differs. Suggestions are always welcomed.